Lately, I’ve been neck-deep in all things artificial intelligence. I’ve been reading, writing, and pontificating about how AI is revolutionizing education, shaking up fine art, and generally making us all wonder if we’ll soon be replaced by robots who not only grade essays but paint masterpieces. But as dazzling as AI’s potential may be, let’s not ignore the robotic elephant in the room: AI has a dark side, and it’s not lurking in the shadows—it’s right in front of us, pulling off scams that make even seasoned con artists blush.

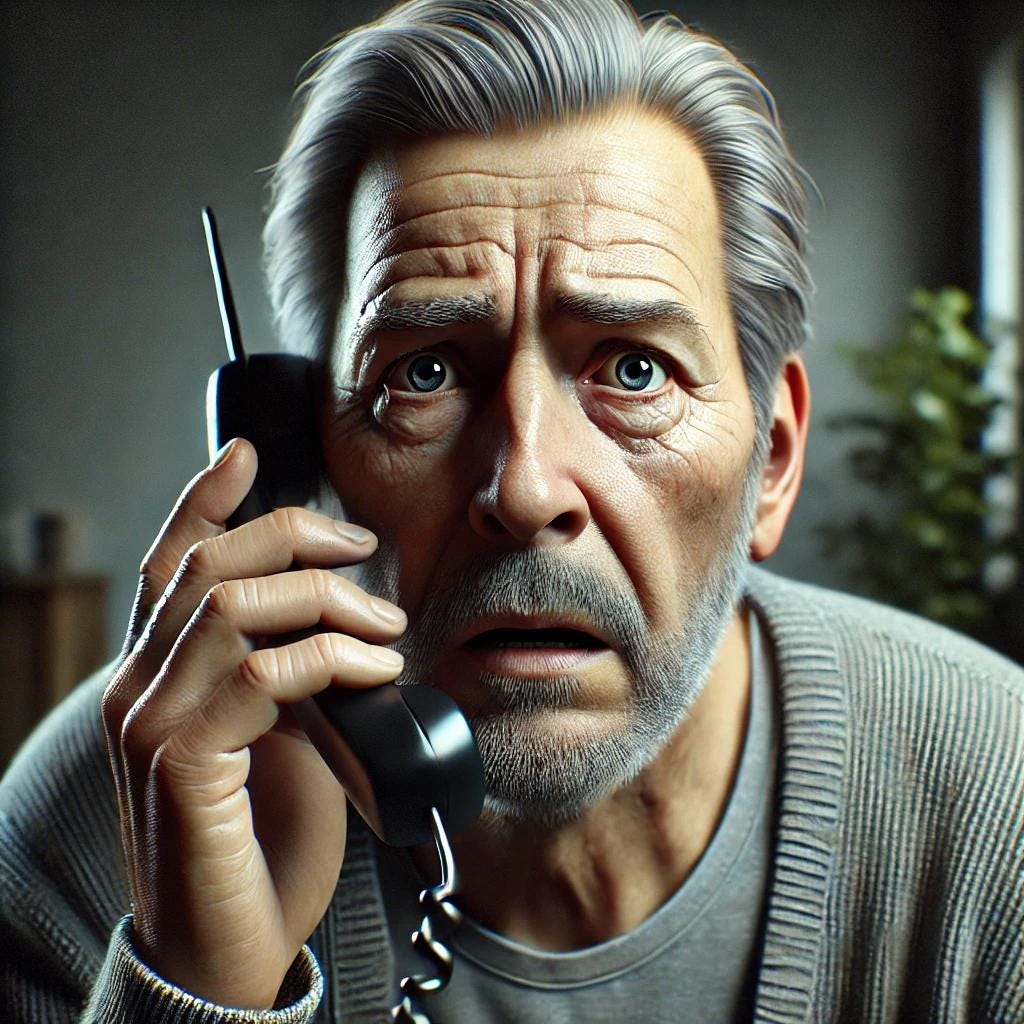

Take Anthony, for example, a father from California who received a call that sounded exactly like his son. The voice was panicked and pleading, claiming to be in trouble after a car accident. Naturally, Anthony didn’t think twice before wiring $25,000. Turns out, his son was fine, at home watching TV. The scammer, however, had been busy using AI to clone his son’s voice perfectly. Talk about a plot twist. (Source: New York Post)

And then there’s the woman who thought she hit the jackpot when “Elon Musk” himself appeared on a video call, complete with AI-generated charm and charisma. They didn’t date for long—just long enough for him (or, rather, it) to clean out her savings. The kicker? This fake Elon was so convincing that I might have even handed over my wallet. (Source: CNBC)

Deepfakes aren’t just for romance scams and fake family emergencies. Remember those viral images of Donald Trump being arrested? Millions believed them, sparking chaos and confusion. Of course, they were entirely fabricated by an overzealous AI that moonlights as a political prankster. (Source: The Guardian)

Voice cloning is equally terrifying—and strangely impressive. All it takes is a few seconds of someone’s audio, and voilà: you can now impersonate them with uncanny accuracy. A Canadian couple fell victim to this when they got a call from their “grandson” asking for bail money. They sent thousands, only to later discover their beloved grandson was safe and sound—and AI had taken them for a ride. (Source: CBS News)

And just when you think you’ve heard it all, criminals have turned to AI for... music fraud? That’s right. Scammers use AI to churn out fake music tracks, then use bots to inflate their streams on platforms like Spotify. It’s like Milli Vanilli meets a computer science degree. (Source: Rolling Stone)

AI even dabbles in fake journalism. In China, a man used ChatGPT to fabricate a story about a train crash, complete with all the drama and none of the facts. He didn’t just stir up fear—he also landed himself in jail. (Source: BBC)

The scariest part? The law hasn’t caught up. How do you prosecute a robot? How do you prove intent when the “criminal” is an algorithm? And let’s be honest—how do you explain this to your grandma without sounding like you’re pitching the next “Terminator” sequel?

It’s easy to laugh at the absurdity of some of these crimes, but the implications are serious. As AI becomes more advanced, these scams will get harder to spot. We live in a world where you can’t always trust what you see or hear.

AI is an incredible tool that is transforming education, art, and countless other fields. But as these stories show, it’s also rewriting the rulebook for crime. The question is, how do we respond? We can’t just sit back and hope for the best while Elon Musk’s AI doppelgänger takes over. It’s time to get serious about understanding this technology, setting boundaries, and ensuring that we’re not the ones getting scammed in the battle between humans and machines.

Because let’s face it—losing your wallet to a fake Elon is one thing. Losing our ability to trust? That’s a whole different story.